This section provides an introduction to how to do evaluation and outlines general steps to take when conducting a program evaluation. While this section does contain tips and tools, they are best implemented in the context of a well-defined and specific evaluation approach, which can be chosen and designed to be appropriate to both the specific type of intervention you want to evaluate and the context in which you will evaluate it.

So, how do you do evaluation?

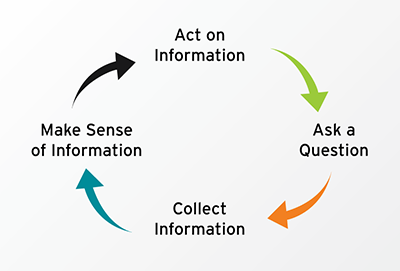

At the most basic level, evaluation involves asking a few important questions about your work, collecting information that helps you answer those questions, making sense of the information, and acting on that information.

These steps all have more formal names, and many types of approaches to evaluation have many more specific steps. Just keep in mind that evaluation involves more than the collection of information or data. The process of evaluation starts way before you begin collecting data and does not end with analysis of the data. That is, if you do a written pre- and post-test as part of your program, that’s a tool you use in evaluation, not the entire evaluation. And if you’re looking to improve your evaluation, that process will likely involve more than just revamping the instruments you use to collect information.

Who needs to be involved?

Many approaches to evaluation give significant focus to the question of who needs to be involved in program evaluation. As with most other decisions you will need to make around evaluation, who to involve – and when to involve them – depends on many different factors.

Consider the following questions (also available as a worksheet) to help guide your brainstorming about who to include:

- Who is impacted by the program or by the evaluation?

- Who makes decisions about or can impact how the program or the evaluation is implemented?

- Whose voices are most in need of amplification during the process of evaluation planning?

- To whom will the evaluation need to speak? To whom do you need or want to tell the story of your work?

- What areas of expertise do you need to plan the evaluation? Who can you draw on for that expertise? (This question will likely need to be revisited as the evaluation takes shape.)

After you have a sense of who might need to be included, you’ll also want to consider other factors such as the following:

- What is the timeframe for planning the evaluation?

- What resources are available for planning (e.g., can you offer payment or any sort of stipend for participation in evaluation planning)?

- What is the level of buy-in for evaluation among the various groups and people named above?

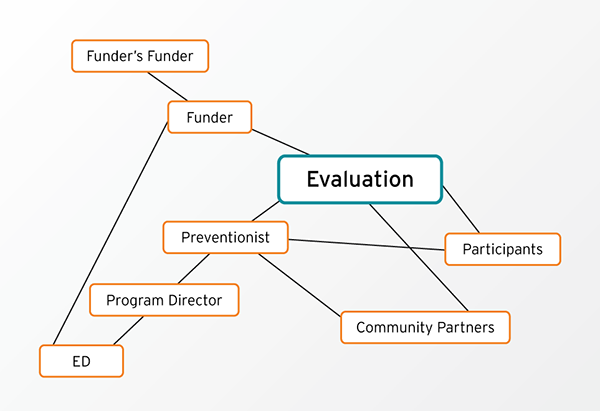

You might want to sketch out a diagram that highlights these parties, including their relationship to the evaluation and to each other. You can use the Mapping Your Evaluation System handout or draw it by hand on a blank sheet of paper. Consider the following basic chart:

This shows the primary stakeholders in many evaluations: funders, agency staff members, and participants. Additionally, if your work is collaborative, you may have community partners who are impacted by or who impact the evaluation. This diagram can expand quickly once you take into account the setting in which you’re working with participants. If you’re working in a school, teachers, parents, and administrators might be added to the chart. If you’re doing norms change work within a community, then the possible stakeholders expand to include all the members of that community.

Involving stakeholders who impact and are impacted by the evaluation is part of what you do to set yourself up for success. Here are some examples of what that means:

Funders: Funders often stipulate the type of evaluation or evaluation measures that must be used for programs they fund. Having funders at the table for planning the evaluation helps you align with their requirements. Also, sometimes funders require processes or methods that are not ideal for your population or your programming. If you involve them in the process, you have a chance to advocate for changes if any are needed and the funders can see firsthand why the changes might be necessary.

Program Participants: Program participants can serve as an important reality check on both program planning and evaluation planning. Because evaluations by and large end up collecting data from and about a particular population, participants’ direct input on credible and culturally responsive ways to do that can help orient your evaluation toward meaningful questions and valid ways to answer them. If you’re taking a participatory approach to program evaluation, you’ll want to involve program participants in the process as soon as it is meaningful to do so.

Community Partners: Other community partners might be brought on board for their expertise in an area of program development and evaluation or because they also work with particular populations of interest, among many other reasons.

Tips

Keep in mind that involving multiple stakeholders also involves managing and accounting for multiple power dynamics operating within the evaluation team. It’s important to consider how those dynamics will be negotiated. If you’ve considered answers to the questions above, you can probably already see places where power asymmetries are apparent. For example, you might have both funders and program participants involved. Consider in advance how that will be navigated and how the participation of the most marginalized players (likely the program participants) will be both robust and valued. If you cannot ensure meaningful involvement for participants in the planning process, it might be preferable to engage them in other ways rather than run the risk of tokenizing them or shutting them down through the process.

When you know what the limits and expectations of participation are, you can share them with potential stakeholders and let them make a decision about their involvement. If they do not want to be a full member of the evaluation team, perhaps they will still want to assist with one of the following steps:

- reviewing evaluation plans or tools,

- participating in analysis and interpretation, or

- brainstorming ways to use the data.

Keep in mind that the people you involve in planning the evaluation may or may not be the same people you involve in implementing the evaluation. Consider where people will have the most impact and prioritize their involvement in the steps and decision-making points accordingly. This helps avoid causing fatigue among your evaluation team and also increases the likelihood that people will feel good about their involvement and make meaningful contributions.

When do we start?

Ideally, you will plan the evaluation as you’re planning the program, so that they work seamlessly together and so that evaluation procedures can be built in at all of the most useful times during implementation.

A detailed planning process for both programming and evaluation sets you up for success. Even though it can feel tempting to rush the process – or skip it altogether – there is no substitute for thoughtful planning. Intentional program and evaluation planning, which might take as long as three to six months before program implementation begins, can involve any or all of the following:

- data collection to determine the scope of the issue to be addressed,

- setting short-, mid-, and long-term goals,

- identifying the best population(s) to reach to address the problem,

- identifying and building the program components,

- building community buy-in, and

- designing evaluation systems for the program.

As you move along in planning your evaluation, even before you implement anything, you might notice that aspects of your program plan need to be refined or re-thought. This is one of the ways evaluation can help your work even before you begin collecting data! You will also need to determine whether or not your program is ready to be evaluated (i.e., is it evaluable?). If you do not have a strong program theory or do not have buy-in for evaluation or agreement about program goals among critical stakeholders, it might not yet be appropriate to conduct an evaluation (Davies, 2015). You also need to determine what type of evaluation is most appropriate for it.

Sometimes, however, we find ourselves with a program or initiative already in progress and realize, “Oops! I totally forgot about an evaluation.” If that’s the case, don’t despair. You will still need to go through all of the usual steps for planning an evaluation. You might also have to jump back a few steps to catch up, and you may need to use different methods when you begin collecting data (e.g., a retrospective pre-test to establish a baseline after the fact).

How do we start?

Almost all forms of evaluation start with the need to describe what will be evaluated, also known as the evaluand. For sexual violence preventionists, this will be some aspect of your prevention programming or perhaps your entire comprehensive program. Describing your program or initiative helps you identify whether all of the components are in place and whether everything is set up to progress in a meaningful and useful way. It will also help you determine what type of evaluation your program warrants.

If you have pre-determined outcomes, it can also help you make sure that your outcomes and your intervention are strongly related to each other and that your outcomes progress in a logical way. This is also the point at which you can check to make sure the changes you seek are connected to your initiatives through theory or previous research.

A description of the initiative/s to be implemented and evaluated will most likely include the following components:

- A description of the issue or problem you are addressing

- An overall vision for what could be different if you address the issue

- The specific changes you hope your initiatives will help create in the participants, the community, or any other systems

- Tangible supports for program implementation like program budgets, in-kind resources, staff time, and so on

- People you intend to reach directly with your initiative

- Each aspect of your intervention that will be implemented

- Specific steps you will take as part of program implementation

- Any contextual issues that might impact your efforts, either positively or negatively

Several models and processes exist to assist in describing a program; the most well-known model in the nonprofit world is probably the logic model.

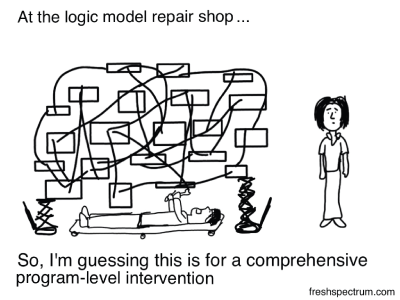

Many people cringe when they hear the term “logic model,” because this tool is much used and often misunderstood and misapplied. Logic models are most appropriate for initiatives that are relatively clear-cut. That is, the initiative should have a clear, research- or theory-based line of reasoning that shows why you believe your intervention will lead to your desired outcomes.

Want to see how we learned to love the logic model? Take a look at this brief video for some inspiration:

Look back to the list of components of a program description above. You’ll notice they coincide with logic models’ components:

| Logic model component | Program description component |
|---|---|
| Problem statement | A description of the issue or problem you are addressing |
| Vision or Impact | An overall vision for what could be different if you address the issue |
| Outcomes (usually broken out into short-term, mid-term, and long-term) | The specific changes you hope your initiatives will help create in the participants, the community, or any other systems |
| Resources/Inputs | Tangible supports for program implementation like program budgets, in-kind resources, staff time, and so on |
| Outputs | Each aspect of your intervention that will be implemented (e.g., how many media ads you intend to run or how many sessions you will meet with participants); people you intend to reach directly with your initiative |
| Activities | Specific steps you will take as part of program implementation (e.g., implementing a curriculum, using a social norms campaign) |
| External Factors | Any contextual issues that might impact your efforts, either positively or negatively |

A logic model is a picture of the intersection between your ideal and realistic goals, which is to say that it represents something achievable if you implement what you intend to implement. In practice, one or more aspects of your plan usually shift during implementation.

If you are working on an innovative initiative or an initiative without pre-determined outcomes, this model will be of far less use to you than to someone working on an initiative with clear activities and easily determined, theory-driven or theory-informed outcomes.

More than that, however, linear logic models are neither exciting nor inspiring, and the process of building them can seem dull, too. Program staff often find this format of little use, if any.

But there is no reason your program description has to take the form of a linear logic model.

Inspiration

Check out this animated program logic video (Tayawa, 2015). Can you identify the components named above?

So, if you need to describe your program, or even if you are required to create a logic model, consider taking an alternate route. Get a group of stakeholders together and use the Program Model Development resource to guide your process. Be creative: map your thinking until you have both an image and words that represent your vision.

If your work is centered on the needs of youth, you might be interested in this NSVRC podcast discussing Vermont’s Askable Adult prevention campaign to learn how research informed their process, the components of the campaign, and how they evaluated their program.

Logic Model Resources

If you’re not familiar with logic models, check out these handy resources that offer guidance on developing and using logic models. These resources all primarily focus on a traditional, linear logic modeling process, but they offer good insight into that process. You can transfer what you learn into a more creative process if that appeals to you.

Developing a Logic Model or Theory of Change (Online Resource) This section of the Community Toolbox provides a useful overview of logic models.

Logic Model Worksheet (PDF, 1 page) This worksheet from VetoViolence, a resource of the Centers for Disease Control and Prevention, provides a simple fillable form to save and print your logic model.

Logic Model Workbook (PDF, 25 pages) This workbook from Innovation Network, Inc. walks you through each component of a logic model and includes templates and worksheets to help you develop your own model.

Logic Model Development Guide (PDF, 71 pages) This guide from the W.K. Kellogg Foundation provides extensive guidance on logic model development and using logic models for evaluation. It includes exercises to help you in your development.

Using Logic Models for Planning Primary Prevention Programs (Online Presentation, 26:52 minutes) This presentation describes the value of logic models in planning a violence against women primary prevention effort. It starts by looking at how logic models build on existing strengths, and when to use a logic model. The presentation then reviews logic model basics, explaining how logic models are a simple series of questions and exploring the steps in creating logic models.

References

Davies, R. (2015). Evaluability assessment. Retrieved from BetterEvaluation: http://betterevaluation.org/themes/evaluability_assessment
